
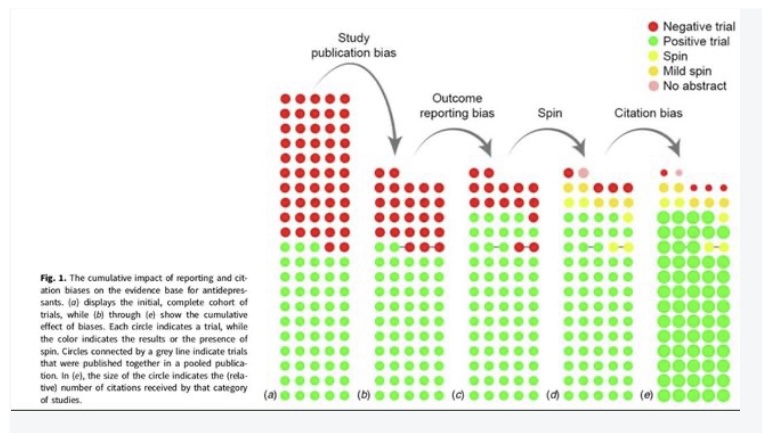

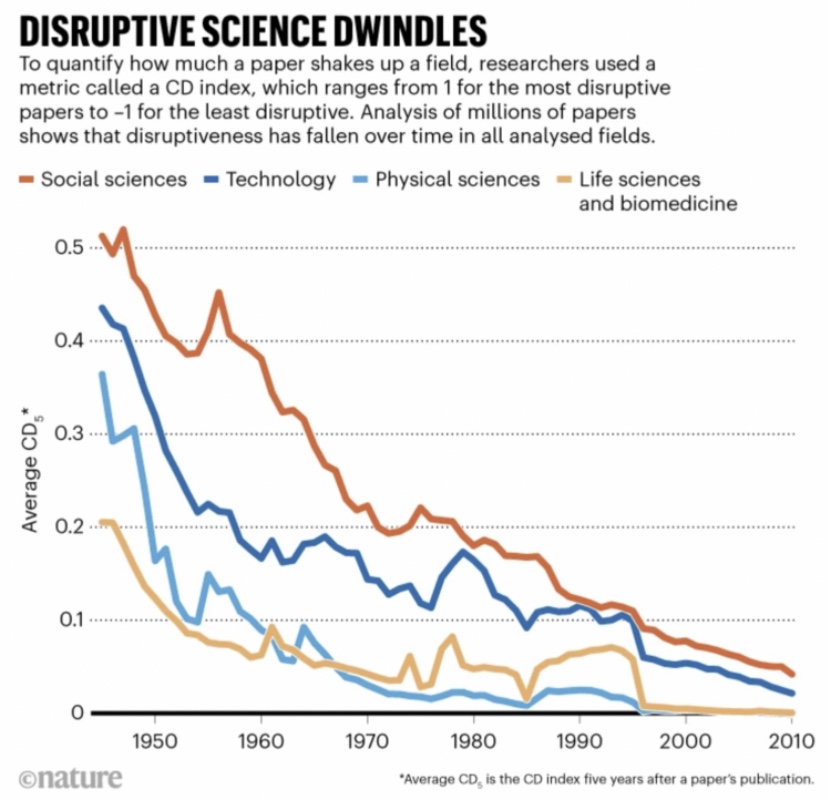

I’m not terribly surprised to learn that scientific advancement has slowed over my lifetime. A recent study published in the journal Nature documented a secular decline in the frequency of “disruptive” or “breakthrough” scientific research across a range of fields. Research has become increasingly dominated by “incremental” findings, according to the authors. The graphic below tells a pretty dramatic story:

The index values used in the chart range “from 1 for the most disruptive to -1 for the least disruptive.” The methodology used to assign these values, which summarize academic papers as well as patents, produces a few oddities. Why, for example, does the tech revolution of the last 40 years create barely a blip in the technology index in the chart above? And why have tech research and social science research always been more “disruptive” than other fields of study?
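For readers curious how a single number between -1 and 1 can come out of citation data, here is a minimal sketch. It is my own simplified reading of a consolidation-disruption style index, not the authors' code. The idea as I understand it: later papers that cite a focal paper but none of its references count toward disruption, later papers that cite both the focal paper and its references count against it, and later papers that cite only the references dilute the score. The function name `cd_index`, its arguments, and the toy paper IDs are all hypothetical, and the sketch ignores the time window the published measure applies.

```python
def cd_index(focal_id, focal_refs, later_papers):
    """Simplified disruption score for one focal paper.

    focal_id     : identifier of the focal paper (hypothetical IDs)
    focal_refs   : set of IDs the focal paper itself cites
    later_papers : dict mapping each later paper's ID to the set of IDs it cites
    Returns a float in [-1, 1], or None if no later paper is relevant.
    """
    only_focal = 0  # cite the focal paper but none of its references
    both = 0        # cite the focal paper and at least one of its references
    only_refs = 0   # cite the references but skip the focal paper

    for cited in later_papers.values():
        cites_focal = focal_id in cited
        cites_refs = bool(cited & focal_refs)
        if cites_focal and not cites_refs:
            only_focal += 1
        elif cites_focal and cites_refs:
            both += 1
        elif cites_refs:
            only_refs += 1
        # papers citing neither are irrelevant to this focal paper

    total = only_focal + both + only_refs
    if total == 0:
        return None
    return (only_focal - both) / total


# Toy example with made-up IDs: one paper of each kind, plus one irrelevant.
later = {
    "a": {"F"},          # cites only the focal paper
    "b": {"F", "r1"},    # cites the focal paper and one of its references
    "c": {"r2"},         # cites only a reference
    "d": {"x"},          # cites neither
}
score = cd_index("F", {"r1", "r2"}, later)
```

Under this reading, a paper that makes its own sources obsolete scores near 1, while a paper that is always cited alongside its sources scores near -1. That also suggests why shifts in citation habits alone could move the index.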

Putting those questions aside, the Nature paper finds trends that are basically consistent across all fields. Apparently, systematic forces have led to declines in these measures of breakthrough scientific findings. The authors try to provide a few explanations as to the forces at play: fewer researchers, incrementalism, and a growing role of large-team research that induces conformity. But if research has become more incremental, that’s more accurately described as a manifestation of the disease, rather than a cause.

Conformity

Steven F. Hayward skewers the authors a little, and perhaps unfairly, stating a concern held by many skeptics of current scientific practices. Hayward says the paper:

“… avoids the most significant and obvious explanation with the myopia of Inspector Clouseau, which is the deadly confluence of ideology and the increasingly narrow conformism of academic specialties.”

Conformism in science is nothing new, and it has often interfered with the advancement of knowledge. The earliest cases of suppression of controversial science were motivated by religious doctrine, but challenges to almost any scientific “consensus” seem to be looked upon as heresy. Several early cases of suppression are discussed here. Matt Ridley has described the case of Mary Wortley Montagu, who visited Ottoman Turkey in the early 1700s and witnessed the application of pus from smallpox blisters to small scratches on the skin of healthy subjects. The mild illness this induced led to immunity, but the British medical establishment ridiculed her. A similar fate was suffered by a Boston physician in 1721. Ridley says:

“Conformity is the enemy of scientific progress, which depends on disagreement and challenge. Science is the belief in the ignorance of experts, as [the physicist Richard] Feynman put it.”

When was the Scientific Boom?

I couldn’t agree more with Hayward and Ridley on the damaging effects of conformity. But what gave rise to our recent slide into scientific conformity, and when did it begin? The Nature study on disruptive science used data on papers and patents starting in 1945. The peak year for disruptive science within the data set was … 1945, but the index values were relatively high over the first two decades of the data set. Maybe those decades were very special for science, with a variety of applications and high-profile accomplishments that have gone unmatched since. As Scott Sumner says in an otherwise unrelated post, in many ways we’ve failed to live up to our own expectations:

“In retrospect, the 1950s seem like a pivotal decade. The Boeing 707, nuclear power plants, satellites orbiting Earth, glass walled skyscrapers, etc., all seemed radically different from the world of the 1890s. In contrast, airliners of the 2020s look roughly like the 707, we seem even less able to build nuclear power plants than in the 1960s, we seem to have a harder time getting back to the moon than going the first time, and we still build boring glass walled skyscrapers.”

It’s difficult to put the initial levels of the “disruptiveness” indices into historical context. We don’t know whether science was even more disruptive prior to 1945, or how the indices used by the authors of the Nature article would have captured it. And it’s impossible to say whether there is some “normal” level of disruptive research. Is a “normal” index value equal to zero, which we now approach as an asymptote?

Some incredible scientific breakthroughs occurred decades before 1945, to take Einstein’s theory of relativity as an obvious example. Perhaps the index value for physical sciences would have been much higher at that time, were it measured. Whether the immediate post-World War II era represented an all-time high in scientific disruption is anyone’s guess. Presumably, the world is always coming from a more primitive base of knowledge. Discoveries, however, usually lead to new and deeper questions. The authors of the Nature article acknowledge and attempt to test for the “burden” of a growing knowledge base on the productivity of subsequent research and find no effect. Nevertheless, it’s possible that the declining pattern after 1945 represents a natural decay following major “paradigm shifts” in the early twentieth century.

The Psychosis Now Known As “Wokeness”

The Nature study used papers and patents only through 2010. Therefore, the decline in disruptive science predates the revolution in “wokeness” we’ve seen over the past decade. But “wokeness” amounts to a radicalization of various doctrines that have been knocking around for years. The rise of social justice activism, critical theory, and anthropogenic global warming theology all began long before the turn of the century and had far-reaching effects that extended to the sciences. The recency of “wokeness” certainly doesn’t invalidate Hayward and Ridley when they note that ideology has a negative impact on research productivity. It’s likely, however, that some fields of study are relatively immune to the effects of politicization, such as the physical sciences. Surely other fields are more vulnerable, like the social sciences.

Citations: Not What They Used To Be?

There are other possible causes of the decline in disruptive science as measured by the Nature study, though the authors believe they’ve tested and found these explanations lacking. It’s possible that an increase in collaborative work led to a change in citation practices. For example, this study found that while self-citation has remained stable, citation of those within an author’s “collaboration network” has declined over time. Another paper identified a trend toward citing review articles in ecology journals rather than the research upon which those reviews were based, resulting in incorrect attribution of ideas and findings. That would directly reduce the measured “disruptiveness” of a given paper, but it’s not clear whether that trend extends to other fields.

Believe it or not, “citation politics” is a thing! It reflects the extent to which a researcher should suck up to prominent authors in a field of study, or to anyone else who might be deemed potentially helpful or harmful. In a development that speaks volumes about trends in research productivity, authors are now urged to append a “Citation Diversity Statement” to their papers. Here’s an academic piece addressing the subject of “gendered citation practices” in contemporary physics. The 11 authors of this paper would do well to spend more time thinking about problems in physics than obsessing about whether their world is “unfair”.

Science and the State

None of those other explanations should be taken to disavow my strong feeling that science has been politicized and that this is harming our progress toward a better world. In fact, politicized science usually leads us astray. Perhaps the most egregious example of politicized conformism today is climate science, though the health sciences went headlong toward a distinctly unhealthy conformism during the pandemic (and see this for a dark laugh).

Politicized science leads to both conformism and suppression. Here are several channels through which politicization might create these perverse tendencies and reduce research productivity or disruptiveness:

- Political or agenda-driven research is driven by subjective criteria, rather than objective inquiry and even-handed empiricism

- Research funding via private or public grants is often contingent upon whether the research can be expected to support the objectives of the funding NGOs, agencies, or regulators. The gravy train is reserved for those who support the “correct” scientific narrative

- Promotion or tenure decisions may be sensitive to the political implications of research

- Government agencies have been known to block access to databases funded by taxpayers when a scientist wishes to investigate the “wrong questions”

- Journals and referees have political biases that may influence the acceptance of research submissions, which in turn influences the research itself

- The favorability of coverage by a politicized media influences researchers, who are sensitive to the damage the media can do to one’s reputation

- The influence of government agencies on media treatment of scientific discussion has proven to be a potent force

- The chance that one’s research might have a public policy impact is heavily influenced by politics

- The talent sought and/or attracted to various fields may be diminished by the primacy of political considerations. Indoctrinated young activists generally aren’t the material from which objective scientists are made

Conclusion

In fairness, there is a great deal of wonderful science being conducted these days, despite the claims appearing in the Nature piece and the politicized corruption undermining good science in certain fields. Tremendous breakthroughs are taking place in areas of medical research such as cancer immunotherapy and diabetes treatment. Fusion energy is inching closer to a reality. Space research is moving forward at a tremendous pace in both the public and private spheres, despite NASA’s clumsiness.

I’m sure there are several causes for the 70-year decline in scientific “disruptiveness” measured in the article in Nature. Part of that decline might have been a natural consequence of coming off an early twentieth-century burst of scientific breakthroughs. There might be other clues related to changes in citation practices. However, politicization has become a huge burden on scientific progress over the past decade. The most awful consequences of this trend include a huge misallocation of resources from industrial planning predicated on politicized science, and a meaningful loss of lives owing to the blind acceptance of draconian health policies during the Covid pandemic. When guided by the state or politics, what passes for science is often no better than scientism. There are, however, even in climate science and public health disciplines, many great scientists who continue to test and challenge the orthodoxy. We need more of them!

I leave you with a few words from President Dwight Eisenhower’s Farewell Address in 1961, in which he foresaw issues related to the federal funding of scientific research:

“Akin to, and largely responsible for the sweeping changes in our industrial-military posture, has been the technological revolution during recent decades.

In this revolution, research has become central; it also becomes more formalized, complex, and costly. A steadily increasing share is conducted for, by, or at the direction of, the Federal government.

Today, the solitary inventor, tinkering in his shop, has been overshadowed by task forces of scientists in laboratories and testing fields. In the same fashion, the free university, historically the fountainhead of free ideas and scientific discovery, has experienced a revolution in the conduct of research. Partly because of the huge costs involved, a government contract becomes virtually a substitute for intellectual curiosity. For every old blackboard there are now hundreds of new electronic computers.

The prospect of domination of the nation’s scholars by Federal employment, project allocations, and the power of money is ever present and is gravely to be regarded.

Yet, in holding scientific research and discovery in respect, as we should, we must also be alert to the equal and opposite danger that public policy could itself become the captive of a scientific-technological elite.”