
A few little-recognized facts about global warming are summarized nicely by climate researcher Javier in a comment on this post by Dr. Roy Spencer:

“It is mainly over land and not over sea. It is mainly in the Northern Hemisphere and not in the Southern Hemisphere. It is mainly during winter and not during summer. And it affects mainly minimal (night) temperature and not maximal (day) temperature.”

I added the hyperlinks to Javier’s comment. The last two items on his list emphasize a benign aspect of the warming we’ve experienced since the late 1970s. After all, cold temperatures are far deadlier than warm temperatures.

Here is a disclaimer: my use of the term “global warming” refers to the fact that averages of measured temperatures have risen in a few fits and starts over the past four decades. I do not use the term to mean a permanent trend induced by human activity, since that time span is very short in climatological terms, and the observed increase is well within the historical range of natural variation.

Few seem aware that the surface temperature record is plagued by an obvious issue: the siting of most weather stations in urban environments. In fact, urban weather stations account for 82% of total stations in the U.S., as Jim Steele writes in “Our Urban ‘Climate Crisis’”. Temperatures run hot in cities because building materials absorb heat and a high proportion of the ground is covered by impervious surfaces. Some stations well outside metropolitan areas are also situated near concrete and pavement. There is little doubt that urbanization and thoughtless siting decisions for weather stations have corrupted temperature measurements and exaggerated surface warming trends.

Hot summer days always arouse expressions of climate alarm. However, increases in summer and daytime temperatures have been relatively modest compared to increases in winter and nighttime temperatures. In the Roy Spencer post linked above, he reports that 80% of the U.S. warming observed by a NASA satellite system (AIRS) from September 2002 to March 2019 occurred at night.

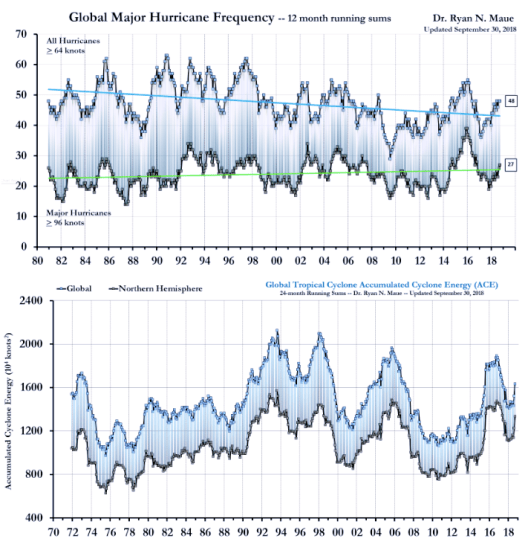

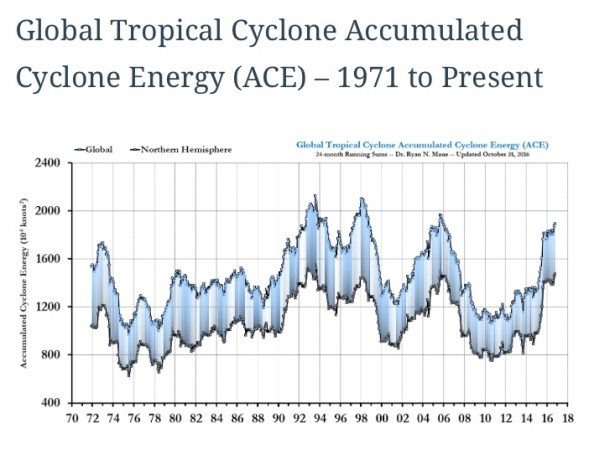

Of course, climate alarmists also claim that global warming makes temperatures more volatile. So, they argue, there are now more very hot days even if the change in the average summer temperature is modest. The facts do not support that claim, however. Indeed, the world has experienced less temperature volatility as global temperatures have risen. And less extreme weather, as it happens, runs contrary to another theme in the warmist narrative.

There is some reason to believe that the relative increase in nighttime temperature is connected to the urban heat island effect. Pavement, concrete, and other materials retain heat overnight. Thus, increasing urbanization leads to nighttime temperatures that do not fall from their daily highs as much as they did a few decades back. Urban surfaces amplify daytime heating less than they raise overnight lows, so the diurnal temperature range (the gap between the daily high and the daily low) narrows. But I should note that some rural farmers insist that nighttime lows have increased relative to daytime highs there as well, and Roy Spencer himself is not confident that the satellite temperature data on which his finding was based reflects a strong urban heat island effect.
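The arithmetic behind a narrowing diurnal range is simple: if lows rise faster than highs, the range shrinks and most of the rise in the daily mean is attributable to nighttime. Here is a minimal Python sketch, assuming the common station convention that the daily mean is the average of the high and the low. The function names and temperatures are hypothetical illustrations, not measured data.

```python
def diurnal_range(t_max: float, t_min: float) -> float:
    """Diurnal temperature range: daily high minus daily low (deg C)."""
    return t_max - t_min


def nighttime_share(d_max: float, d_min: float) -> float:
    """Fraction of the change in the daily mean, taken as (Tmax + Tmin) / 2,
    that comes from the change in the daily low (assumed convention)."""
    total = d_max + d_min
    if total == 0:
        raise ValueError("no net change in mean temperature")
    return d_min / total


# Hypothetical example: highs rise 0.5 deg C, lows rise 1.5 deg C.
before = diurnal_range(30.0, 18.0)
after = diurnal_range(30.5, 19.5)
print(f"range before: {before} C, after: {after} C")
print(f"share of mean warming at night: {nighttime_share(0.5, 1.5):.0%}")
```

With these made-up numbers the range narrows from 12 °C to 11 °C, and three quarters of the 1 °C rise in the daily mean comes from the warmer lows.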

For perspective, it’s good to remember that we live in the midst of an interglacial period. These are relatively brief, temperate intervals between lengthier glacial periods (see here, and more from Javier here). The current interglacial is well advanced, having begun about 11,700 years ago, but Javier estimates that it could last for another 1,500 years. That would be longer than the historical average. At the peak of the last interglacial period, temperatures were about 2°C higher than today and sea levels were 5 meters higher. The last interglacial ended about 120,000 years ago, but the historical average time between interglacials is only about 41,000 years. These low-frequency changes in the global climate are generally driven by the Earth’s axial tilt (obliquity), recurring cycles in the shape of our elliptical orbit around the Sun (eccentricity), and the Earth’s solar exposure (insolation) and albedo.

Biased surface temperature records have both inspired and reinforced the sense of panic surrounding global warming. Few observers seem to recognize that a strong bias exists, let alone its source: the urban heat island effect. And few seem to realize that most of the warming we’ve experienced since the 1970s has occurred at night, not during the day, and that these changes are well within the range of natural variation. Dramatic climate change happens at both long and short time scales for reasons that are largely astronomical. The lengthy historical record accumulated by paleoclimatologists shows that current concerns over global warming are exaggerated. I’m quite confident that mankind will find ways to adapt to climate change in either direction, but some global warming might prove beneficial once the next glacial period begins.