Tags

97% Consensus, AGW, Carbon Forcing Models, Climate Feedbacks, CO2 and Greening, East Anglia University, Hurricane Frequency, Judith Curry, Matt Ridley, NOAA, Paleoclimate, Peer Review Corruption, Ross McKitrick, Roy Spencer, Sea Levels, Steve McIntyre, Temperature Proxies, Urbanization Bias

It’s a great irony that our educated and affluent classes have been largely zombified on the subject of climate change. Their brainwashing by the mainstream media has been so effective that these individuals are unwilling to consider more nuanced discussions of the consequences of higher atmospheric carbon concentrations, or any scientific evidence to suggest contrary views. I recently attended a party at which I witnessed several exchanges on the topic. It was apparent that these individuals are conditioned to accept a set of premises while lacking real familiarity with supporting evidence. Except in one brief instance, I avoided engaging on the topic, despite my bemusement. After all, I was there to party, and I did!

The zombie alarmists express their views within a self-reinforcing echo chamber, reacting to each other’s virtue signals with knowing sarcasm. They also seem eager to avoid any “denialist” stigma associated with a contrary view, so there is a sinister undercurrent to the whole dynamic. These individuals are incapable of citing real sources and evidence; they cite anecdotes or general “news-say” at best. They confuse local weather with climate change. Most of them haven’t the faintest idea how to find real research support for their position, even with powerful search engines at their disposal. Of course, the search engines themselves are programmed to prioritize the very media outlets that profit from climate scare-mongering. Catastrophe sells! Those media outlets, in turn, are eager to quote the views of researchers in government who profit from alarmism in the form of expanding programs and regulatory authority, as well as researchers outside of government who profit from government grant-making authority.

The Con in the “Consensus”

Climate alarmists take assurance in their position by repeating the false claim that 97% of climate scientists believe that human activity is the primary cause of warming global temperatures. The basis for this strong assertion comes from an academic paper that reviewed other papers, the selection of which was subject to bias. The 97% figure was not a share of “scientists”. It was the share of the selected papers stating agreement with the anthropogenic global warming (AGW) hypothesis. And that figure is subject to other doubts, in addition to the selection bias noted above: the categorization into agree/disagree groups was made by “researchers” who were, in fact, environmental activists, who counted several papers written by so-called “skeptics” among the set that agreed with the strong AGW hypothesis. So the “97% of scientists” claim is a distortion of the actual findings, and the findings themselves are subject to severe methodological shortcomings. On the other hand, there are a number of widely recognized, natural reasons for climate change, as documented in this note on 240 papers published over just the first six months of 2016.

Data Integrity

It’s rare to meet a climate alarmist with any knowledge of how temperature data is actually collected. What exactly is the “global temperature”, and how can it be measured? It is a difficult undertaking, and it wasn’t until 1979 that it could be done with any reliability. According to Roy Spencer, that’s when satellite equipment began measuring:

“… the natural microwave thermal emissions from oxygen in the atmosphere. The intensity of the signals these microwave radiometers measure at different microwave frequencies is directly proportional to the temperature of different, deep layers of the atmosphere.”

Prior to the deployment of weather satellites, and starting around 1850, temperature records came only from surface temperature readings. These are taken at weather stations on land and collected at sea, and they are subject to quality issues that are generally unappreciated. Weather stations are unevenly distributed and they come and go over time; many of them produce readings that are increasingly biased upward by urbanization. Sea surface temperatures are collected in different ways with varying implications for temperature trends. Aggregating these records over time and geography is a hazardous undertaking, and these records are, unfortunately, the most vulnerable to manipulation.

The urbanization bias in surface temperatures is significant. According to this paper by Ross McKitrick, the number of weather stations counted in the three major global temperature series declined by more than 4,500 since the 1970s (over 75%), and most of those losses were rural stations. From McKitrick’s abstract:

“The collapse of the sample size has increased the relative fraction of data coming from airports to about 50% (up from about 30% in the late 1970s). It has also reduced the average latitude of source data and removed relatively more high altitude monitoring sites. Oceanic data are based on sea surface temperature (SST) instead of marine air temperature (MAT)…. Ship-based readings changed over the 20th century from bucket-and-thermometer to engine-intake methods, leading to a warm bias as the new readings displaced the old.”

Think about that the next time you hear about temperature records, especially NOAA reports on a “new warmest month on record”.
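
The sample-composition point in McKitrick’s abstract can be illustrated with a toy calculation. This is only a sketch: the 30% and 50% airport shares are the ones quoted above, but the station counts, the temperatures, and the function name `mean_temperature` are invented for illustration. It also assumes a simple unweighted average of absolute readings, which is not necessarily how any published series is constructed.

```python
def mean_temperature(n_rural, n_airport, rural_temp=10.0, airport_temp=11.0):
    """Unweighted average reading across all stations.

    All inputs are hypothetical: every rural station is assumed to read
    rural_temp and every airport station airport_temp (degrees C).
    """
    total = n_rural * rural_temp + n_airport * airport_temp
    return total / (n_rural + n_airport)


# Hypothetical network in which airports are 30% of the sample.
before = mean_temperature(n_rural=70, n_airport=30)

# No station's reading changes, but rural stations are dropped
# until airports make up 50% of the sample.
after = mean_temperature(n_rural=30, n_airport=30)

print(f"before: {before:.2f}  after: {after:.2f}  shift: {after - before:+.2f}")
```

In this made-up case the average moves only because the mix of stations changed. Whether, and by how much, that happens in a real temperature series depends on how its compilers weight and adjust the station data.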

Data Manipulation

It’s rare to find alarmists having any awareness of the scandal at the University of East Anglia, which involved data falsification by prominent members of the climate change “establishment”. That scandal also shed light on corruption of the peer-review process in climate research, including a bias against publishing work skeptical of the accepted AGW narrative. Few are aware now of a very recent scandal involving manipulation of temperature data at NOAA in which retroactive adjustments were applied in an effort to make the past look cooler and more recent temperatures warmer. There is currently an FOIA request outstanding for communications between the Obama White House and a key scientist involved in the scandal. Here are Judith Curry’s thoughts on the NOAA temperature manipulation.

Think about all that the next time you hear about temperature records, especially NOAA reports on a “new warmest month on record”.

Other Warming Whoppers

Last week on social media, I noticed a woman emoting about the way hurricanes used to frighten her late mother. This woman was sharing an article about the presumed negative psychological effects that climate change was having on the general public. The bogus premises: that we are experiencing an increase in the frequency and severity of storms, that climate change is causing the storms, and that people are scared to death about it! Just to be clear, I don’t think I’ve heard much in the way of real panic, and real estate prices and investment flows don’t seem to be under any real pressure. In fact, the frequency and severity of severe weather have been in decline even as atmospheric carbon concentrations have increased over the past 50 years.

I heard another laughable claim at the party: that maps are showing great areas of the globe becoming increasingly dry, mostly at low latitudes. I believe the word “frying” was used. That is patently false, but I believe it’s another case in which climate alarmists have confused model forecasts with fact.

The prospect of rising sea levels is another matter that concerns alarmists, who always fail to note that sea levels have been increasing for a very long time, well before carbon concentrations could have had any impact. In fact, the sea level increases in the past few centuries are a rebound from lows during the Little Ice Age, and levels are now back to where the seas were during the Medieval Warm Period. But even those fluctuations look minor by comparison to the increases in sea levels that occurred over 8,000 years ago. Sea levels are rising at a very slow rate today, so slowly that coastal construction is proceeding as if there is little if any threat to new investments. While some of this activity may be subsidized by governments through cheap flood insurance, real money is on the line, and that probably represents a better forecast of future coastal flooding than any academic study can provide.

Old Ideas Die Hard

Two enduring features of the climate debate are 1) the extent to which so-called “carbon forcing” models of climate change have erred in over-predicting global temperatures, and 2) the extent to which those errors have gone unnoticed by the media and the public. The models have been plagued by a number of issues; the climate is not a simple system. One basic shortcoming, however, has to do with the treatment of strong feedback effects: the alarmist community has asserted that feedbacks are positive, on balance, magnifying the warming impact of a given carbon forcing. In fact, the opposite seems to be true: second-order responses due to cloud cover, water vapor, and circulation effects are negative, on balance, at least partially offsetting the initial forcing.

Fifty Years Ain’t History

One other amazing thing about the alarmist position is an insistence that the past 50 years should be taken as a permanent trend. On a global scale, our surface temperature records are sketchy enough today, but recorded history is limited to the very recent past. There are recognized methods for estimating temperatures in the more distant past by using various temperature proxies. These are based on measurements of other natural phenomena that are temperature-sensitive, such as ice cores, tree rings, and matter within successive sediment layers such as pollen and other organic compounds.

The proxy data has been used to create temperature estimates into the distant past. A basic finding is that the world has been this warm before, and even warmer, as recently as 1,000 years ago. This demonstrates the wide range of natural variation in the climate, and today’s global temperatures are well within that range. At the party I mentioned earlier, I was amused to hear a friend say, “Ya’ know, Greenland isn’t supposed to be green”, and he meant it! He is apparently unaware that Greenland was given that name by Viking settlers around 1000 AD, who inhabited the island during a warm spell lasting several hundred years… until it got too cold!

Carbon Is Not Poison

The alarmists take the position that carbon emissions are unequivocally bad for people and the planet. They treat carbon as if it is the equivalent of poisonous air pollution. The popular press often illustrates carbon emissions as black smoke pouring from industrial smokestacks, but like oxygen, carbon dioxide is a colorless gas and a gas upon which life itself depends.

Our planet’s vegetation thrives on carbon dioxide, and increasing carbon concentrations are promoting a “greening” of the earth. Crop yields are increasing as a result; reforestation is proceeding as well. The enhanced vegetation provides an element of climate feedback against carbon “forcings” by serving as a carbon sink, absorbing increasing amounts of carbon dioxide and releasing oxygen.

Matt Ridley has noted one of the worst consequences of the alarmists’ carbon panic and its influence on public policy: the vast misallocation of resources toward carbon reduction, much of it dedicated to subsidies for technologies that cannot pass economic muster. Consider that those resources could be devoted to many other worthwhile purposes, like bringing electric power to third-world families who otherwise must burn dung inside their huts for heat; for that matter, perhaps the resources could be left under the control of taxpayers, who can put them to the uses they value most highly. The regulatory burdens imposed by these policies on carbon-intensive industries represent lost output that can’t ever be recouped, and all in the service of goals that are of questionable value. And of course, the anti-carbon efforts almost certainly reflect a diversion of resources to the detriment of more immediate environmental concerns, such as mitigating truly toxic industrial pollutants.

The priorities underlying the alarm over climate change are severely misguided. The public should demand better evidence than consistently erroneous model predictions and manipulated climate data. Unfortunately, a media eager for drama and statism is complicit in the misleading narrative.

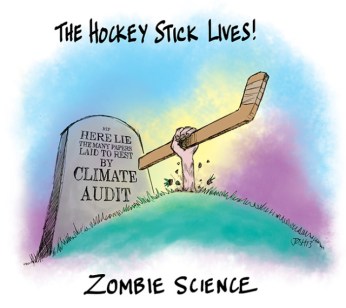

FYI: The cartoon at the top of this post refers to the climate blog climateaudit.org. The site’s blogger Steve McIntyre did much to debunk the “hockey stick” depiction of global temperature history, though it seems to live on in the minds of climate alarmists. McIntyre appears to be on an extended hiatus from the blog.